011: Calibrations Have a Context Collapse Problem

Note: The views and opinions expressed in this content are solely those of the author and do not necessarily reflect the official policy or position of the author’s employer.

What is Context Collapse?

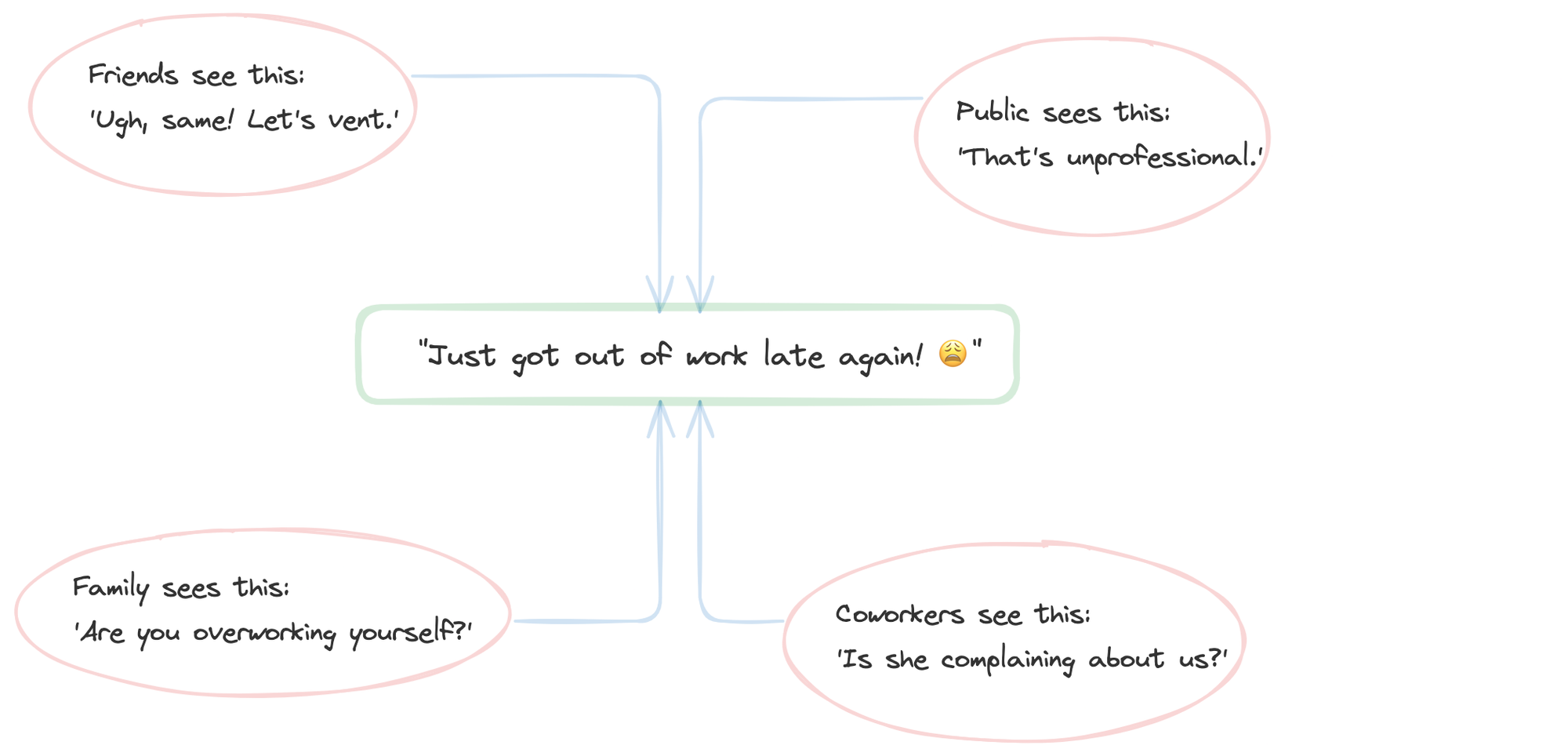

Context collapse occurs when content intended for one audience is consumed by multiple audiences simultaneously, each bringing their own frames of reference and expectations. The term was originally coined to describe social media dynamics, where a message loses its intended context as it travels across different social spheres. (It's also why Twitter is a hell-hole.)

This same phenomenon doesn't just plague our social media posts; it also undermines one of the most critical processes in our organizations: performance reviews. On social media, the cost might be a misunderstanding or an awkward conversation. In calibration meetings, the stakes are careers, compensation, and team morale.

We're in that room again: five long tables arranged in a square, twenty-five managers hunched over laptops, and flickering overhead lights that never quite stop buzzing... okay, I'm making that up; most of us are on Zoom for this. But you get the idea. It's calibration day. The plan is to be objective and fair. But if years in engineering leadership have taught me one thing, it's that the moment we all sit down, context has a habit of crashing in on itself.

The Well-Intentioned Fiction

In theory, calibration is the sanity check that keeps each manager from grading on their own curve. Managers gather to cross-verify each other's assessments, making sure that one engineer's "Exceeds Expectations" on Team A matches another engineer's "Exceeds" on Team B. Too often, though, it turns into performance review theater.

In reality? It can be a competitive storytelling hour.

I once watched a manager deliver a passionate three-minute speech about an engineer's "groundbreaking" work on a caching layer. Two minutes in, I realized I had no idea if this was actually impressive or if the manager just happened to be the Don Draper of technical pitches.

Context Collapse in Action: Just as your social media post can be misinterpreted by different audiences, an engineer's achievements can be misunderstood when presented to managers from different disciplines in a calibration setting.

Some Dimensions of Context Distortion

1. Domain-Specific Blind Spots

Each manager brings a specialty: frontend, backend, data engineering, SRE. But nobody's expertise magically expands during calibration. Instead, it gets diluted, as each specialist is asked to judge work well outside their own domain.

Let me walk through a real example:

Feedback Statement: "They built an offline-first feature for our mobile app."

What Really Happened:

- Implemented local caching with sync conflict resolution

- Designed a retry mechanism resilient to flaky networks

- Balanced battery usage with background sync strategies

- Had to navigate OS-level limitations across Android & iOS

- Coordinated with backend to expose sync-safe APIs

But in Calibration…

Backend Manager: "Sounds like a client-side wrapper around the API. Not too complex." (Context: They're used to thinking in terms of data pipelines, distributed systems.)

Frontend Lead: "We've done offline-ish stuff with service workers; is it really that different?" (Context: Service workers do help, but they don't mirror the complexities of native mobile.)

Data Engineering Director: "Is this work even measurable in business impact? Seems like an edge case." (Context: Their world runs on dashboards and batch jobs, not end-user latency or offline UX.)

SRE Manager: "Did this touch any infra scalability or reliability? Otherwise seems narrow." (Context: They look for load, system health, and incident risk.)

Outcome: The actual technical lift gets flattened, and the engineer may end up under-leveled or without a champion, simply because:

- The right vocabulary wasn't used for a cross-disciplinary audience

- The value wasn't translated into universally legible terms

- The audience's mental models didn't match the engineer's domain

2. Technology-Specific Bias

Even within the same domain, technologies and environments vary wildly. If you've never built a streaming pipeline, you might assume that adding a real-time aggregator is a trivial "few lines of code" job, missing the nuances of scaling, cost optimization, and many other factors.

I once heard a senior leader dismiss weeks of migration work as "just moving some yaml files around." The room went quiet. Nobody corrected them immediately. That silence? That's your engineering culture dying a little.

Here's another example that illustrates this point:

The "Simple" Database Migration

A backend engineer spent three months carefully migrating a critical system from MongoDB to PostgreSQL. Their work included:

- Creating complex data transformation pipelines

- Ensuring zero-downtime migration with dual-write systems

- Building comprehensive validation tooling

- Addressing performance regressions through query optimization

- Implementing new monitoring systems

During calibration, a frontend-focused engineering director summarized it as: "They moved data from one database to another. Isn't that mostly automated these days?"

This statement revealed their mental model of databases as interchangeable storage bins rather than complex ecosystems with distinct behaviors, constraints, and failure modes. The complexity of the migration, which prevented a potential business-halting disaster, was completely lost in translation.

3. Visibility Bias

A brand-new UI redesign gets screenshots, demos, and executive attention. A behind-the-scenes reliability improvement gets a single bullet point: "Reduced incidents by 40%."

The engineer who eliminates persistent on-call issues might save the company millions in prevented outages, but their manager gets 30 seconds to explain why this matters. Meanwhile, someone who built a flashy dashboard that executives see daily gets minutes of discussion and universal praise.

4. The Advocacy Lottery

Let's also be honest: your rating depends partly on how well your manager plays the game. A manager skilled at storytelling can transform "fixed some bugs" into "systematically eliminated critical customer experience issues through deep technical analysis and innovative solutions."

Two engineers with identical performance but different managers could walk away with completely different ratings: one gets a TED Talk intro, while the other is stuck whispering their story around the water cooler.

5. Anchoring and the Quiet Compliance

The first strong opinion in the room becomes gravity; everything else orbits around it. If a senior director says, "I'm not sure that qualifies as 'Exceeds Expectations,'" watch half the room suddenly discover fascinating patterns in the carpet.

I've seen managers walk in with one rating in mind, then gradually shift position as the winds of consensus blow through the room. We have a herd mentality, and challenging the herd's emerging consensus feels dangerous, even when we know better.

6. Fifty Shades of "Meets Expectations"

Every manager interprets rating categories differently. One manager's "Exceeds Expectations" is another's "Tuesday." Some managers hold a bar so high that Einstein would barely scrape a "Meets," while others hand out "Exceeds" like conference swag.

In calibration, these philosophies collide in real-time. Engineers on different teams effectively play by different rules, yet get judged on a single scale that nobody fully agrees on.

7. The Time Squeeze

With so many managers, so many engineers to discuss, and a meeting of fixed length, each person gets a couple of minutes. A year's worth of complex infrastructure work becomes: "They improved latency." There isn't time to explain how, or why it was difficult, or how it enabled three new product features.

It's like trying to understand a novel by reading only the chapter titles. We reduce the complex work done by complex humans to bullet points and sound bites.

8. Misalignment on Growth vs. Impact

Some managers heavily weigh an engineer’s growth trajectory (how much they improved or learned), while others look primarily for direct impact on key business metrics. In calibration, these philosophies clash.

Engineer X joined mid-year, quickly ramped up, and delivered a successful pilot project. Engineer Y has been around for years, sustaining critical features that keep revenue flowing. Which is more valuable? Calibrations can devolve into philosophical debates about what matters most: raw impact or upward trajectory. The result is confusion and uneven outcomes.

9. The Game Theory of Manager Behavior

Perhaps most insidious is how calibration creates systemic patterns of behavior among managers that further distort the process. Through the lens of systems thinking, we can see how the structure of the system incentivizes particular behaviors:

Rating Inflation: Some managers systematically rate their engineers one level higher than they truly believe is deserved. They know their ratings will likely be negotiated downward in calibration, so they start high as a buffer. For example, they rate someone "Exceeds Expectations" when they privately believe the engineer is solidly "Meets Expectations." This creates an arms race of inflation that makes honest assessment nearly impossible.

Strategic Timing: Savvy managers learn to game the promotion cycle itself. They'll put someone up for promotion a cycle earlier than merited (e.g., mid-year rather than end-year), knowing that even if the engineer doesn't get promoted now, they'll be first in line for the next cycle—when they would have actually been ready anyway. This converts the calibration system into a strategic game rather than an honest assessment process.

Stockpiling "Evidence": Some managers withhold constructive feedback throughout the year, instead collecting it as ammunition for calibration. They weaponize what should be development opportunities, turning them into retroactive justifications for ratings. This prevents engineers from addressing issues when they occur and creates harmful surprises during review time.

These behaviors aren't due to "bad managers" but rather emerge naturally from the system's structure and incentives. When managers are evaluated on how their teams perform in calibration, we shouldn't be surprised when they optimize for the game rather than the intended purpose.

The Real Cost

The cost is more than stressful meetings or hurt feelings.

- Talent Disillusionment: Engineers who feel misjudged lose trust in the system. Over time, these high-value contributors quietly update their LinkedIn profiles.

- Biased Career Trajectories: Likeability and presentational flair become unfair catalysts for career growth. Meanwhile, methodical, behind-the-scenes contributors wonder why they bothered.

- Eroded Manager Credibility: When your engineers realize you're more focused on crafting narratives than giving accurate feedback, you've lost something precious.

- Organizational Blind Spots: Calibration could surface systemic issues—resource gaps, skill distribution, emerging team bottlenecks. But that's rarely the focus.

Key Insight: Context collapse doesn't just hurt individual careers; it can hurt an entire engineering culture by rewarding the wrong behaviors and missing opportunities for organizational learning.

Breaking the Cycle

So, how do we fix this mess? The real question might be: Do we need to fix calibration, or do we need to rethink it entirely?

Here are some approaches to consider, organized by the problems they address:

Addressing Domain and Technical Biases

- Create domain-specific calibrations: Instead of one massive session, hold smaller, domain-focused discussions where the right experts can assess the right work.

- Implement cross-functional pre-reviews: Before formal calibration, have engineers' work reviewed by peers from different domains who can translate achievements into their own context.

Improving Context Preservation

- Engineer voice and co-authorship: Let engineers co-author their performance narratives, clarifying technical accomplishments and domain challenges.

- Standardized achievement formats: Create templates that ensure consistent representation of accomplishments across teams, reducing the impact of presentation skills.

Recognizing Invisible Contributions

- Dedicated recognition tracks: Create specific recognition categories for reliability, mentoring, documentation, and other hard-to-quantify contributions.

- Impact storytelling workshops: Train managers on how to effectively communicate the impact of invisible work.

Making Calibration More Continuous

- Implement ongoing calibration: Replace the year-end theater with quarterly check-ins to prevent recency bias and allow for more accurate, real-time insights.

- Decouple feedback from evaluation: Create systems where managers give frequent, developmental feedback that isn't directly tied to performance reviews, reducing the incentive to "save" feedback for calibration.

Addressing Systemic Gaming

- Audit for inflation patterns: If you're a senior leader, review patterns of rating inflation across teams and address systemic issues rather than focusing only on individual cases.

- Create safe failure paths for promotions: Design promotion processes where "not yet" outcomes don't penalize the engineer or manager, reducing the incentive for strategic timing games.

- Focus on values over outcomes: Evaluate managers not just on team performance but on how well they embody the feedback and development values you want to see (rather than how they play the calibration game).

Systems Thinking Perspective: As Donella Meadows would say, "Don't blame the players, change the game." Rather than trying to stop managers from gaming the system, redesign the system so gaming behaviors don't pay off.

Try This: At your next calibration meeting, ask managers to explain one achievement from each of their reports in terms that would make sense to someone completely outside their domain. This simple exercise can highlight how much context is typically lost.

Get Comfortable with Nuance

Calibration is supposed to be our guardrail against subjectivity, an institutional mechanism for fairness. But in its current form, it often does the opposite, oversimplifying complex work into neat, stack-ranked narratives.

The good news? We have the power to stop flattening our engineers into bullet points.

The first step is acknowledging that engineering work is inherently complex, and that this complexity can't be compressed into a half-hour discussion. We need processes that welcome nuance, encourage deeper conversations, and reward the truly important work that might otherwise go unnoticed.

Just as we've become more aware of how context collapse affects our social media presence, we need to recognize how it undermines our organizational processes.

The parallels are striking:

| Social Media Context Collapse | Calibration Context Collapse |
|---|---|
| Different audiences interpret the same post differently | Different managers interpret the same work differently |
| Personal posts are seen by professional contacts | Technical work is judged by non-technical criteria |
| Message loses nuance as it crosses contexts | Work loses complexity as it's summarized |
| The loudest, most controversial content gains attention | The flashiest, most presentable work gains recognition |
| Users learn to game algorithms for visibility | Managers learn to game calibration for better outcomes |

If organizations only celebrate what's flashy, what's easy to talk about, or what can be pitched in 60 seconds, we'll end up with a team of great pitchers and, possibly, mediocre builders.

Let's aim higher. Let's build a culture that sees the full context, not just the headlines.

Final Thought: If you're sitting in a calibration meeting next quarter, ask yourself two questions: "Am I capturing the real story of this engineer's work, or just the story that fits neatly on a template?" and "Am I playing a game, or am I truly serving my team's growth?" The more we ask these questions, the closer we get to a calibration process that genuinely understands the people in our org, rather than collapsing their stories into a stack-ranked list.

Old School Burke Newsletter